On the limits of theorems, the sociology of prizes, and the slow work of intellectual maturity

When I re-read two older posts of mine on Bell’s Theorem — one written in 2020, at a moment when my blog was gaining unexpected traction, and another written in 2023 in reaction to what I then experienced as a Nobel Prize award controversy — I feel a genuine discomfort.

Not because I think the core arguments were wrong.

But because I now see more clearly what was doing the talking.

There is, in both texts, a mixture of three things:

- A principled epistemic stance (which is still there);

- A frustration with institutional dynamics in physics (also there);

- But, yes, also a degree of rhetorical impatience that no longer reflects how I want to think — or be read.

This short text is an attempt to disentangle those layers.

1. Why I instinctively refused to “engage” with Bell’s Theorem

In the 2020 post, I wrote — deliberately provocatively — that I “did not care” about Bell’s Theorem. That phrasing was not chosen to invite dialogue; it was chosen to draw a boundary. At the time, my instinctive reasoning was this:

Bell’s Theorem is a mathematical theorem. Like any theorem, it tells us what follows if certain premises are accepted. Its physical relevance therefore depends entirely on whether those premises are physically mandatory, or merely convenient formalizations.

This is not a rejection of mathematics. It is a refusal to grant mathematics automatic ontological authority.

I was — and still am — deeply skeptical of the move by which a formal result is elevated into a metaphysical verdict about reality itself. Bell’s inequalities constrain a particular class of models (local hidden-variable models of a specific type). They do not legislate what Nature must be. In that sense, my instinct was aligned not only with Einstein’s well-known impatience with axiomatic quantum mechanics, but also with Bell himself, who explicitly hoped that a “radical conceptual renewal” might one day dissolve the apparent dilemma his theorem formalized.
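The CHSH form of Bell's inequality shows concretely how the bound depends on its premises. The sketch below is an illustration under stated assumptions, not a reconstruction of any argument from the original posts. It uses the textbook CHSH combination and the standard singlet correlation; the function names are hypothetical. It enumerates every local deterministic model, meaning each measurement setting is assigned a fixed +1 or -1 outcome in advance. In every such model the CHSH combination stays at magnitude 2. The quantum-mechanical prediction for a spin singlet reaches 2√2.

```python
import itertools
import math

# Illustrative sketch (assumed setup): the CHSH form of Bell's inequality.
# Alice chooses setting a0 or a1, Bob chooses b0 or b1; every outcome is +1 or -1.

def chsh(e00, e01, e10, e11):
    """CHSH combination S = E(a0,b0) + E(a0,b1) + E(a1,b0) - E(a1,b1)."""
    return e00 + e01 + e10 - e11

def max_local_deterministic():
    """Largest |S| over all local deterministic models.

    Each model fixes Alice's outcomes (A0, A1) and Bob's outcomes (B0, B1)
    in advance, independently of the distant setting. Local stochastic
    models are averages of these 16 cases, so they cannot exceed this value.
    """
    best = 0
    for A0, A1, B0, B1 in itertools.product((1, -1), repeat=4):
        s = chsh(A0 * B0, A0 * B1, A1 * B0, A1 * B1)
        best = max(best, abs(s))
    return best

def singlet_correlation(theta_a, theta_b):
    """Quantum prediction E = -cos(theta_a - theta_b) for a spin singlet."""
    return -math.cos(theta_a - theta_b)

def quantum_chsh():
    """|S| for the textbook angle choice that maximizes the quantum value."""
    a0, a1 = 0.0, math.pi / 2
    b0, b1 = math.pi / 4, -math.pi / 4
    E = singlet_correlation
    return abs(chsh(E(a0, b0), E(a0, b1), E(a1, b0), E(a1, b1)))

print(max_local_deterministic())  # 2
print(round(quantum_chsh(), 4))   # 2.8284 (= 2 * sqrt(2))
```

The arithmetic itself is not in dispute. The enumeration shows that the bound of 2 comes from the premises built into it: definite outcomes assigned locally to every setting, and settings chosen independently of those outcomes.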

Where I now see a weakness is not in the stance, but in its expression. Saying “I don’t care” reads as dismissal, while what I really meant — and should have said — is this:

I do not accept the premises as ontologically compulsory, and therefore I do not treat the theorem as decisive.

That distinction matters.

2. Bell, the Nobel Prize, and a sociological paradox

My 2023 reaction was sharper, angrier, and less careful — and that is where my current discomfort is strongest.

At the time, it seemed paradoxical to me that:

- Bell was once close to receiving a Nobel Prize for a theorem he himself regarded as provisional,

- and that, nearly six decades after the theorem was published in 1964, a Nobel Prize was awarded for experiments demonstrating violations of Bell inequalities.

In retrospect, the paradox is not logical — it is sociological.

The 2022 Nobel Prize did not “disprove Bell’s Theorem” in a mathematical sense. It confirmed, experimentally and with great technical sophistication, that Nature violates inequalities derived under specific assumptions. What was rewarded was experimental closure, not conceptual resolution.

The deeper issue — what the correlations mean — remains as unsettled as ever.

What troubled me (and still does) is that the Nobel system has a long history of rewarding what can be stabilized experimentally, while quietly postponing unresolved interpretational questions. This is not scandalous; it is structural. But it does shape the intellectual culture of physics in ways that deserve to be named.

Seen in that light, my indignation was less about Bell, and more about how foundational unease gets ritualized into “progress” without ever being metabolized conceptually.

3. Authority, responsibility, and where my anger really came from

The episode involving John Clauser and climate-change denial pushed me from critique into anger — and here, too, clarity comes from separation.

The problem there is not quantum foundations.

It is the misuse of epistemic authority across domains.

A Nobel Prize in physics does not confer expertise in climate science. When prestige is used to undermine well-established empirical knowledge in an unrelated field, that is not dissent; it is a category error dressed up as courage.

My reaction was visceral because it touched a deeper nerve: the responsibility that comes with public authority in science. In hindsight, folding this episode into a broader critique of Bell and the Nobel Prize blurred two distinct issues — foundations of physics, and epistemic ethics.

Both matter. They should not be confused.

4. Where I stand now

If there is a single thread connecting my current thinking to these older texts, it is this:

I am less interested than before in winning arguments, and more interested in clarifying where different positions actually part ways — ontologically, methodologically, and institutionally.

That shift is visible elsewhere in my work:

- in a softer, more discriminating stance toward the Standard Model,

- in a deliberate break with institutions and labels that locked me into adversarial postures,

- and in a conscious move toward reconciliation where reconciliation is possible, and clean separation where it is not.

The posts on Bell’s Theorem were written at an earlier stage in that trajectory. I do not disown them. But I no longer want them to stand without context.

This text is that context.

Final notes

1. On method and collaboration

Much of the clarification in this essay did not emerge in isolation, but through extended dialogue — including with an AI interlocutor that acted, at times, less as a generator of arguments than as a moderator of instincts: slowing me down, forcing distinctions, and insisting on separating epistemic claims from emotional charge. That, too, is part of the story — and perhaps an unexpected one. If intellectual maturity means anything, it is not the abandonment of strong positions, but the ability to state them without needing indignation to carry the weight. That is the work I am now trying to do.

It is also why I want to be explicit about how these texts are currently produced: they are not outsourced to AI, but co-generated through dialogue. In that dialogue, I deliberately highlight not only agreements but also remaining disagreements — not on the physics itself, but on its ontological interpretation — with the AI agent I currently use (ChatGPT 5.2). Making those points of convergence and divergence explicit is, I believe, intellectually healthier than pretending they do not exist.

2. On stopping, without pretending to conclude

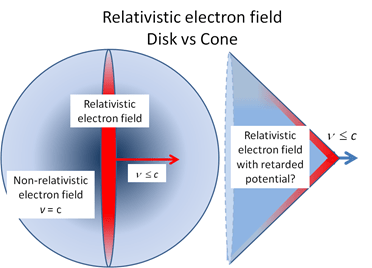

This post also marks a natural stopping point. Over the past weeks, several long-standing knots in my own thinking have either been clarified or cleanly isolated: Bell’s Theorem (what this post is about), the meaning of gauge freedom, the limits of Schrödinger’s equation as a model of charge in motion, and even some very plain sociological considerations on how science moves forward.

What remains most resistant is the problem of matter–antimatter pair creation and annihilation. Here, the theory appears internally consistent, while the experimental evidence, impressive as it is, still leaves a small but non-negligible margin of doubt — largely because of the indirect, assumption-laden nature of what is actually being measured. I do not know the experimental literature well enough to remove that last 5–10% of uncertainty, and I consider it a sign of good mental health not to pretend otherwise.

For now, that is where I leave it. Not as a conclusion, but as a calibration: knowing which questions have been clarified, and which ones deserve years — rather than posts — of further work.

3. Being precise about my use of AI: cleaning up ideas, not outsourcing thinking

What AI did not do

Let me start with what AI did not do.

It did not:

- supply new experimental data,

- resolve open foundational problems,

- replace reading, calculation, or judgment,

- or magically dissolve the remaining hard questions in physics.

In particular, it did not remove my residual doubts concerning matter–antimatter pair creation. On that topic, I remain where I have been for some time: convinced that the theory is internally consistent, convinced that the experiments are impressive and largely persuasive, and yet unwilling to erase the remaining 5–10% of doubt that comes from knowing how indirect, assumption-laden, and instrument-mediated those experiments necessarily are.

What AI did do

What AI did do was something much more modest—and much more useful.

It acted as a moderator of instincts.

In the recent rewrites—most notably in this post (Cleaning Up After Bell)—AI consistently did three things:

- It cut through rhetorical surplus.

  Not by softening arguments, but by separating epistemic claims from frustration, indignation, or historical irritation.

- It forced distinctions.

  Between mathematical theorems and their physical premises; between experimental closure and ontological interpretation; between criticism of ideas and criticism of institutions.

- It preserved the spine while sharpening the blade.

  The core positions did not change. What changed was their articulation: less adversarial, more intelligible, and therefore harder to dismiss.

In that sense, AI did not “correct” my thinking. It helped me re-express it in a way that better matches where I am now—intellectually and personally.

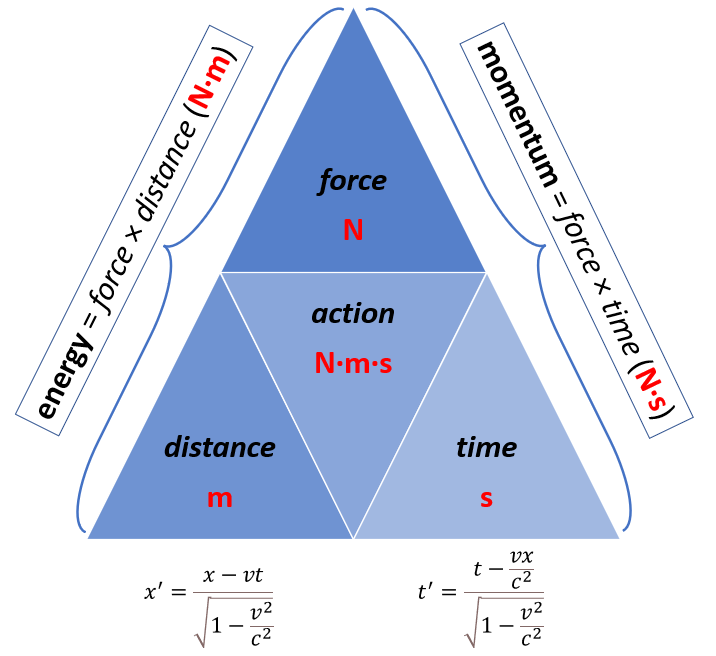

Two primitives or one?

A good illustration is the remaining disagreement between me and my AI interlocutor on what is ultimately primitive in physics.

I still tend to think in terms of two ontological primitives: charge and fields—distinct, but inseparably linked by a single interaction structure. AI, drawing on a much broader synthesis of formal literature, prefers a single underlying structure with two irreducible manifestations: localized (charge-like) and extended (field-like).

Crucially, this disagreement is not empirical. It is ontological, and currently underdetermined by experiment. No amount of rhetorical force, human or artificial, can settle it. Recognizing that—and leaving it there—is part of intellectual maturity.

Why I am stopping (again)

I have said before that I would stop writing, and I did not always keep that promise. This time, however, the stopping point feels natural.

Most of the conceptual “knots” that bothered me in the contemporary discourse on physics have now been:

- either genuinely clarified,

- or cleanly isolated as long-horizon problems requiring years of experimental and theoretical work.

At this point, continuing to write would risk producing more words than signal.

There are other domains that now deserve attention: plain work, family projects, physical activity, and the kind of slow, tangible engagement with the world that no theory—however elegant—can replace.

Closing

If there is a single lesson from this episode, it is this:

AI is most useful not when it gives answers, but when it helps you ask what you are really saying—and whether you still stand by it once the noise is stripped away.

Used that way, it does not diminish thinking.

It disciplines it.

For now, that is enough.