Pre-scriptum (dated 26 June 2020): These posts on elementary math and physics for my kids (they are 21 and 23 now and no longer need such explanations) have not suffered much the attack by the dark force—which is good because I still like them. While my views on the true nature of light, matter and the force or forces that act on them have evolved significantly as part of my explorations of a more realist (classical) explanation of quantum mechanics, I think most (if not all) of the analysis in this post remains valid and fun to read. In fact, I find the simplest stuff is often the best. 🙂

Original post:

We’ve been juggling with a lot of advanced concepts in the previous post. Perhaps it’s time I write something that my kids can understand too. One of the things I struggled with when re-learning elementary physics is the concept of energy. What is energy really? I always felt my high school teachers did a poor job in trying to explain it. So let me try to do a better job here.

A high-school level course usually introduces the topic using the gravitational force, i.e. Newton’s law of universal gravitation: F = GmM/r². This law states that the force of attraction is proportional to the product of the masses m and M, and inversely proportional to the square of the distance r between those two masses. The factor of proportionality is equal to G, i.e. the so-called universal gravitational constant, aka the ‘big G’ (G ≈ 6.674×10⁻¹¹ N·(m/kg)²), as opposed to the ‘little g’, which is the gravity of Earth (g ≈ 9.80665 m/s²). As far as I am concerned, it is at this point where my high-school teacher failed.

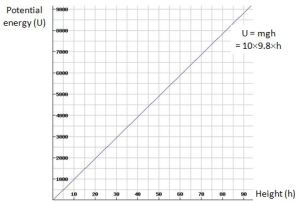

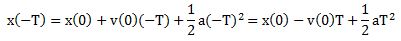

Indeed, he would just go on and simplify Newton’s law of gravitation by writing F = mg, noting that g = GM/r² and that, for all practical purposes, this g factor is constant, because we are talking small distances as compared to the radius of the Earth. Hence, we should just remember that the gravitational force is proportional to the mass only, and that one kilogram amounts to a weight of about 10 newton (9.80665 kg·m/s², i.e. 9.80665 N, to be precise). That simplification would then be followed by another simplification: if we are lifting an object with mass m, we are doing work against the gravitational force. How much work? Well, he’d say, work is – quite simply – the force times the distance in physics, and the work done against the force is the potential energy (usually denoted by U) of that object. So he would write U = Fh = mgh, with h the height of the object (as measured from the surface of the Earth), and he would draw a nice linear graph like the one below (I set m to 10 kg here, and h ranges from 0 to 100 m).

Note that the line looks as if it slopes at slightly less than 45 degrees, but that is only because of our choice of scale: dU/dh is equal to 98.0665 J/m, so if the x- and y-axes had the same scale, we’d have a line that’s almost vertical.

So what’s wrong with this graph? Nothing. It’s just that this graph sort of got stuck in my head, and it complicated a more accurate understanding of energy. Indeed, with examples like the one above, one tends to forget that:

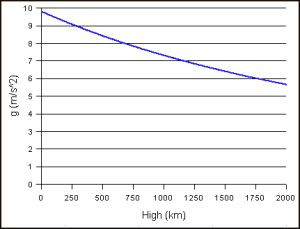

- Such linear graphs are an approximation only. In reality, the gravitational field, and force fields in general, are not uniform and, hence, g is not a constant: the graph below shows how g varies with the height (but the height is expressed in kilometers this time, not in meters).

- Not only is potential energy usually not a linear function but – equally important – it is usually not a positive real number either. In fact, in physics, U will usually take on a negative value. Why? Because we’re indeed measuring and defining it by the work done against the force.
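To get a feel for how good the mgh approximation is, here is a minimal sketch that compares it with the exact change in potential energy for the 10 kg mass used above. The values for the Earth's mass and mean radius are standard textbook figures that I am assuming here (they are not given in the post), and the function names are purely illustrative.

```python
# Assumed constants (standard textbook values, not taken from the post).
G = 6.674e-11        # universal gravitational constant, N·m²/kg²
M_EARTH = 5.972e24   # mass of the Earth, kg
R_EARTH = 6.371e6    # mean radius of the Earth, m

def g_at_height(h):
    """Gravitational acceleration (m/s²) at height h (m) above the surface."""
    return G * M_EARTH / (R_EARTH + h) ** 2

def delta_u_exact(m, h):
    """Exact gain in potential energy (J) when lifting mass m from the surface to height h."""
    return G * M_EARTH * m * (1.0 / R_EARTH - 1.0 / (R_EARTH + h))

def delta_u_linear(m, h):
    """The mgh approximation, using the surface value of g."""
    return m * g_at_height(0.0) * h

for h in (100.0, 1.0e5, 1.0e6):  # 100 m, 100 km and 1000 km
    ratio = delta_u_linear(10.0, h) / delta_u_exact(10.0, h)
    print(f"h = {h:>9.0f} m   g = {g_at_height(h):.3f} m/s²   mgh / exact = {ratio:.5f}")
```

The ratio works out to 1 + h/R, so the linear graph is excellent over 100 m and increasingly off once h becomes comparable to the radius of the Earth.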

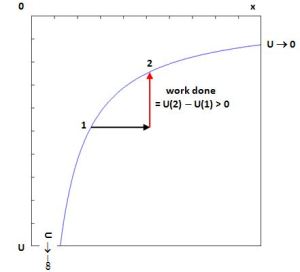

So what’s the more accurate view of things? Well… Let’s start by noting that potential energy is defined in relation to some reference point and, taking a more universal point of view, that reference point will usually be infinity when discussing the gravitational (or electromagnetic) force of attraction. Now, the potential energy of the point(s) at infinity – i.e. the reference point – will, usually, be equated with zero. Hence, the potential energy curve will then take the shape of the graph below (y = –1/x), so U will vary from zero (0) to minus infinity (–∞) , as we bring the two masses closer together. You can readily see that the graph below makes sense: its slope is positive and, hence, as such it does capture the same idea as that linear mgh graph above: moving a mass from point 1 to point 2 requires work and, hence, the potential energy at point 2 is higher than at point 1, even if both values U(2) and U(1) are negative numbers, unlike the values of that linear mgh curve.

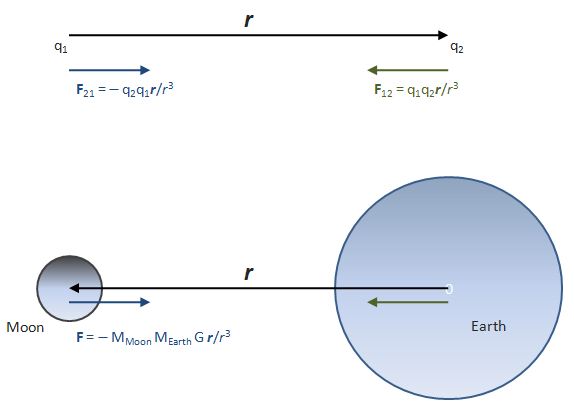

How do you get a curve like that? Well… I should first note another convention which is essential for making the sign come out alright: if the force is gravity, then we should write **F** = –GmM**r**/r³. So we have a minus sign here. And please do note the boldface type: **F** and **r** are vectors, and vectors have both a direction and a magnitude, which is why they are denoted by a bold letter (**r**), as opposed to the scalar quantities G, m, M or r.

Back to the minus sign. Why do we have that here? Well… It has to do with the direction of the force, which, in case of attraction, will be opposite to the so-called radius vector r. Just look at the illustration below, which shows, first, the direction of the force between two opposite electric charges (top) and then (bottom), the force between two masses, let’s say the Earth and the Moon.

So it’s a matter of convention really.

Now, when we’re talking about the electromagnetic force, you know that likes repel and opposites attract, so two charges with the same sign will repel each other, and two charges with opposite sign will attract each other. So **F**₁₂, i.e. the force on q₂ because of the presence of q₁, will be equal to **F**₁₂ = q₁q₂**r**/r³. No minus sign is needed here because q₁ and q₂ are opposite and, hence, the sign of this product will be negative. Therefore, we know that the direction of **F** comes out alright: it’s opposite to the direction of the radius vector **r**. In short, no minus sign is needed here because we already have one. Of course, the original charge q₁ will be subject to a force of the same magnitude but opposite direction, and so we should write **F**₂₁ = –q₁q₂**r**/r³. So we’ve got that minus sign again now. In general, however, we’ll write **F**ᵢⱼ = qᵢqⱼ**r**/r³ when dealing with the electromagnetic force, so that’s without a minus sign, because the convention is to draw the radius vector from charge i to charge j and, hence, the radius vector **r** in the formula for **F**₂₁ would point in the other direction and, hence, the minus sign is not needed.

In short, because of the way that the electromagnetic force works, the sign always comes out right: there is no need for a minus sign in front. However, for gravity, there are no opposite charges: masses are always alike, and so likes actually attract when we’re talking gravity, and so that’s why we need the minus sign when dealing with the gravitational force: the force between a mass i and another mass j will always be written as **F**ᵢⱼ = –mᵢmⱼ**r**/r³, so here we do have to put the minus sign, because the direction of the force needs to be opposite to the direction of the radius vector and so the sign of the ‘charges’ (i.e. the masses in this case), in the case of gravity, does not take care of that.

One last remark here may be useful: always watch out to not double-count forces when considering a system with many charges or many masses: both charges (or masses) feel the same force, but with opposite direction. OK. Let’s move on. If you are confused, don’t worry. Just remember that (1) it’s very important to be consistent when drawing that radius vector (it goes from the charge (or mass) causing the force field to the other charge (or mass) that is being brought in), and (2) that the gravitational and electromagnetic forces have a lot in common in terms of ‘geometry’ – notably that inverse proportionality relation with the square of the distance between the two charges or masses – but that we need to put a minus sign when we’re dealing with the gravitational force because, with gravitation, likes do not repel but attract each other, as opposed to electric charges.

Now, let’s move on indeed and get back to our discussion of potential energy. Let me copy that potential energy curve again and let’s assume we’re talking electromagnetics here, and that we have two opposite charges, so the force is one of attraction.

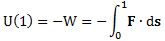

Hence, if we move one charge away from the other, we are doing work against the force. Conversely, if we bring them closer to each other, we’re working with the force and, hence, its potential energy will go down – from zero (i.e. the reference point) to… Well… Some negative value. How much work is being done? Well… The force changes all the time, so it’s not constant and so we cannot just calculate the force times the distance (Fs). We need to do one of those infinite sums, i.e. an integral, and so, for point 1 in the graph above, we can write:

Why the minus sign? Well… As said, we’re not increasing potential energy: we’re decreasing it, from zero to some negative value. If we’d move the charge from point 1 to the reference point (infinity), then we’d be doing work against the force and we’d be increasing potential energy. So then we’d have a positive value. If this is difficult, just think it through for a while and you’ll get there.

Now, this integral is somewhat special because F and s are vectors, and the F·ds product above is a so-called dot product between two vectors. The integral itself is a so-called path integral and so you may not have learned how to solve this one. But let me explain the dot product at least: the dot product of two vectors is the product of the magnitudes of those two vectors (i.e. their length) times the cosine of the angle between the two vectors:

F·ds =│F││ds│cosθ

Why that cosine? Well… To go from one point to another (from point 0 to point 1, for example), we can take any path really. [In fact, it is actually not so obvious that all paths will yield the same value for the potential energy: it is the case for so-called conservative forces only. But so gravity and the electromagnetic force are conservative forces and so, yes, we can take any path and we will find the same value.] Now, if the direction of the force and the direction of the displacement are the same, then that angle θ will be equal to zero and, hence, the dot product is just the product of the magnitudes (cos(0) = 1). However, if the direction of the force and the direction of the displacement are not the same, then it’s only the component of the force in the direction of the displacement that’s doing work, and the magnitude of that component is Fcosθ. So there you are: that explains why we need that cosine function.
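The two ways of writing the dot product (component by component, or magnitudes times the cosine of the angle) can be checked against each other with a small sketch. The force and displacement values below are hypothetical, chosen only so that the numbers come out round, and the helper names are mine.

```python
import math

def work(force, displacement):
    """Work done by a constant force over a straight displacement: the dot product F·s."""
    return sum(f * s for f, s in zip(force, displacement))

def magnitude(v):
    """Length of a vector given as a tuple of components."""
    return math.sqrt(sum(c * c for c in v))

F = (3.0, 4.0)   # hypothetical force in newtons, |F| = 5
s = (2.0, 0.0)   # hypothetical displacement in metres, along the x-axis

# Angle between the two vectors, from their directions in the plane.
theta = math.atan2(F[1], F[0]) - math.atan2(s[1], s[0])

w_components = work(F, s)
w_cosine = magnitude(F) * magnitude(s) * math.cos(theta)
print(w_components, w_cosine)
```

Only the x-component of the force (3 N) does any work here, because the displacement has no y-component. A force at right angles to the displacement would do no work at all.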

Now, solving that ‘special’ integral is not so easy because the distance between the two charges at point 0 is zero and, hence, when we try to solve the integral by putting in the formula for F and finding the primitive and all that, we find there’s a division by zero involved. Of course, there’s a way to solve the integral, but I won’t do it here. Just accept the general result here for U(r):

U(r) = q₁q₂/(4πε₀r)

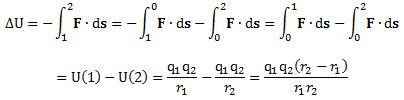

You can immediately see that, because we’re dealing with opposite charges, U(r) will always be negative, while the limit of this function for r going to infinity is equal to zero indeed. Conversely, its limit equals –∞ for r going to zero. As for the 4πε₀ factor in this formula, that factor plays the same role as the G-factor for gravity. Indeed, ε₀ is a ubiquitous electric constant: ε₀ ≈ 8.854×10⁻¹² F/m, but it can be included in the value of the charges by choosing another unit and, hence, it’s often omitted – and that’s what I’ll also do here. Now, the same formula obviously applies to point 2 in the graph as well, and so now we can calculate the difference in potential energy between point 1 and point 2:

Does that make sense? Yes. We’re, once again, doing work against the force when moving the charge from point 1 to point 2. So that’s why we have a minus sign in front. As for the signs of q₁ and q₂, remember these are opposite. As for the value of the (r₂ – r₁) factor, that’s obviously positive because r₂ > r₁. Hence, ΔU = U(1) – U(2) is negative. How do we interpret that? U(2) and U(1) are both negative values, and the difference between them, i.e. U(1) – U(2), is negative as well. Well… Just remember that ΔU is minus the work done to move the charge from point 1 to point 2. Hence, the change in potential energy (ΔU) is some negative value because the amount of work that needs to be done to move the charge from point 1 to point 2 is decidedly positive. Hence, yes, the charge has a higher energy level (albeit negative – but that’s just because of our convention which equates potential energy at infinity with zero) at point 2 as compared to point 1.
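The signs are easy to get lost in, so here is a minimal numerical sketch. It uses the simplified U(r) = q₁q₂/r (the 4πε₀ factor absorbed into the charges), and the charges and distances are hypothetical unit values picked only for illustration.

```python
def potential_energy(q1, q2, r):
    """U(r) = q1*q2/r, in units where the 4*pi*eps0 factor is absorbed into the charges."""
    return q1 * q2 / r

q1, q2 = 1.0, -1.0   # two opposite charges (hypothetical values)
r1, r2 = 1.0, 2.0    # point 2 is farther out than point 1

u1 = potential_energy(q1, q2, r1)
u2 = potential_energy(q1, q2, r2)

delta_u = u1 - u2                # the difference U(1) - U(2) discussed in the text
work_against_force = u2 - u1     # work needed to move the charge from point 1 to point 2

print(f"U(1) = {u1}, U(2) = {u2}")
print(f"U(1) - U(2) = {delta_u}, work from 1 to 2 = {work_against_force}")
```

Both energies are negative, U(2) is the higher (less negative) of the two, and U(1) – U(2) equals q₁q₂(r₂ – r₁)/(r₁r₂), which is negative because the charges are opposite.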

What about gravity? Well… That linear graph above is an approximation, we said, and it also takes r = h = 0 as the reference point but it assigns a value of zero for the potential energy there (as opposed to the –∞ value for the electromagnetic force above). So that graph is actually a linearization of a graph resembling the one below: we only start counting when we are on the Earth’s surface, so to say.

However, in a more advanced physics course, you will probably see the following potential energy function for gravity: U(r) = –GMm/r, and the graph of this function looks exactly the same as that graph we found for the potential energy between two opposite charges: the curve starts at point (0, –∞) and ends at point (∞, 0).

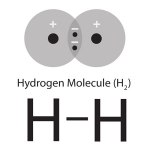

OK. Time to move on to another illustration or application: the covalent bond between two hydrogen atoms.

Application: the covalent bond between two hydrogen atoms

The graph below shows the potential energy as a function of the distance between two hydrogen atoms. Don’t worry about its exact mathematical shape: just try to understand it.

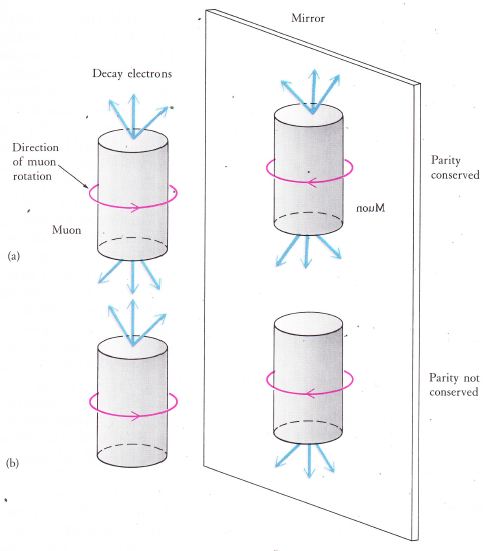

Natural hydrogen comes in H2 molecules, so there is a bond between two hydrogen atoms as a result of mutual attraction. The force involved is a chemical bond: the two hydrogen atoms share their so-called valence electron, thereby forming a so-called covalent bond (which is a form of chemical bond indeed, as you should remember from your high-school courses). However, one cannot push two hydrogen atoms too close, because then the positively charged nuclei will start repelling each other, and so that’s what is depicted above: the potential energy goes up very rapidly because the two atoms will repel each other very strongly.

The right half of the graph shows how the force of attraction vanishes as the two atoms are separated. After a while, the potential energy does not increase any more and so then the two atoms are free.

Again, the reference point does not matter very much: in the graph above, the potential energy is assumed to be zero at infinity (i.e. the ‘free’ state) but we could have chosen another reference point: it would only shift the graph up or down.

This brings us to another point: the law of energy conservation. For that, we need to introduce the concept of kinetic energy once again.

The formula for kinetic energy

In one of my previous posts, I defined the kinetic energy of an object as the excess energy over its rest energy:

K.E. = T = mc² – m₀c² = γm₀c² – m₀c² = (γ–1)m₀c²

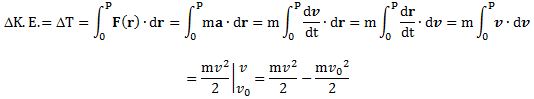

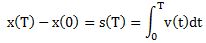

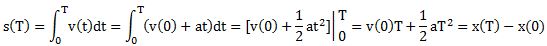

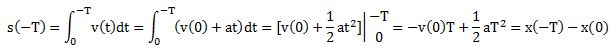

γ is the Lorentz factor in this formula (γ = (1 – v²/c²)^(–1/2)), and I derived the T = mv²/2 formula for the kinetic energy from a Taylor expansion of the formula above, noting that K.E. = mv²/2 is actually an approximation for non-relativistic speeds only, i.e. speeds that are much less than c and, hence, have no impact on the mass of the object: so, non-relativistic means that, for all practical purposes, m = m₀. Now, if m = m₀, then mc² – m₀c² is equal to zero! So how do we derive the kinetic energy formula for non-relativistic speeds then? Well… We must apply another method, using Newton’s Second Law: the force equals the time rate of change of the momentum of an object. The momentum of an object is denoted by **p** (it’s a vector quantity) and is the product of its mass and its velocity (**p** = m**v**), so we can write

**F** = d(m**v**)/dt (again, all bold letters denote vectors).

When the speed is low (i.e. non-relativistic), then we can just treat m as a constant and so we can write **F** = m·d**v**/dt = m**a** (the mass times the acceleration). If m were not constant, then we would have to apply the product rule: d(m**v**)/dt = (dm/dt)**v** + m(d**v**/dt), and so then we would have two terms instead of one. Treating m as a constant also allows us to derive the classical (Newtonian) formula for kinetic energy:

So if we assume that the velocity of the object at point O is equal to zero (so v_O = 0), then ΔT will be equal to T and we get what we were looking for: the kinetic energy at point P will be equal to T = mv²/2.
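How good is mv²/2 as an approximation of (γ–1)m₀c²? The sketch below compares the two for a few speeds, expressed as fractions of c. The function names are mine, and the speeds are chosen only for illustration (very small fractions of c are avoided because γ – 1 then loses floating-point precision).

```python
import math

C = 299_792_458.0  # speed of light, m/s

def kinetic_relativistic(m0, v):
    """T = (gamma - 1) * m0 * c², with gamma the Lorentz factor."""
    gamma = 1.0 / math.sqrt(1.0 - (v / C) ** 2)
    return (gamma - 1.0) * m0 * C ** 2

def kinetic_classical(m0, v):
    """The Newtonian formula T = m0 * v² / 2."""
    return 0.5 * m0 * v ** 2

for beta in (0.01, 0.1, 0.5, 0.9):
    v = beta * C
    ratio = kinetic_relativistic(1.0, v) / kinetic_classical(1.0, v)
    print(f"v = {beta:.2f} c   relativistic / classical = {ratio:.5f}")
```

At one per cent of the speed of light the two formulas agree to better than one part in ten thousand, while at 0.9c the classical formula underestimates the kinetic energy by a factor of more than three.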

Energy conservation

Now, the total energy – potential and kinetic – of an object (or a system) has to remain constant, so we have E = T + U = constant. As a consequence, the time derivative of the total energy must equal zero. So we have:

E = T + U = constant, and dE/dt = 0

Can we prove that with the formulas T = mv²/2 and U = q₁q₂/4πε₀r? Yes, but the proof is a bit lengthy and so I won’t prove it here. [We need to take the time derivatives dT/dt and dU/dt and show that these derivatives are equal except for the sign, which is opposite, and so the sum of those two derivatives equals zero. Note that dT/dt = (dT/dv)(dv/dt) and that dU/dt = (dU/dr)(dr/dt), so you have to use the chain rule for derivatives here.] So just take a mental note of that and accept the result:

(1) mv²/2 + q₁q₂/4πε₀r = constant when the electromagnetic force is involved (no minus sign, because the sign of the charges makes things come out alright), and

(2) mv²/2 – GMm/r = constant when the gravitational force is involved (note the minus sign, for the reason mentioned above: when the gravitational force is involved, we need to reverse the sign).
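As a quick application of formula (2), here is a sketch that computes the speed with which an object dropped from rest at height h reaches the ground, once from the exact conservation law and once from the linear mgh approximation. It ignores air resistance, and the Earth's mass and radius are standard textbook values that I am assuming here.

```python
import math

# Assumed constants (standard textbook values, not taken from the post).
G = 6.674e-11        # N·m²/kg²
M_EARTH = 5.972e24   # kg
R_EARTH = 6.371e6    # m

def impact_speed_exact(h):
    """Speed at the surface from mv²/2 - GMm/r = constant, starting at rest at height h.

    The mass m of the falling object cancels out of the equation.
    """
    return math.sqrt(2.0 * G * M_EARTH * (1.0 / R_EARTH - 1.0 / (R_EARTH + h)))

def impact_speed_linear(h):
    """Same speed from mv²/2 = mgh, with g taken at the surface."""
    g = G * M_EARTH / R_EARTH ** 2
    return math.sqrt(2.0 * g * h)

for h in (100.0, 1.0e6):
    print(f"h = {h:>9.0f} m   exact: {impact_speed_exact(h):.2f} m/s   "
          f"linear: {impact_speed_linear(h):.2f} m/s")
```

For a 100 m drop the two answers are practically identical (about 44 m/s). The difference only shows up for drops that are a sizeable fraction of the Earth's radius.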

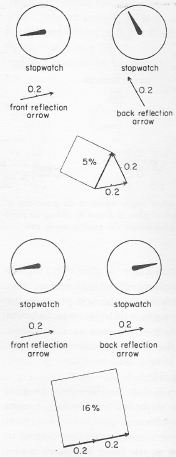

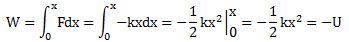

We can also take another example: an oscillating spring. When you try to compress a (linear) spring, the spring will push back with a force of magnitude F = kx. Hence, the energy needed to compress a (linear) spring a distance x from its equilibrium position can be calculated from the same integral/infinite sum formula: you will get U = kx²/2 as a result. Indeed, this is an easy integral (not a path integral), and so let me quickly solve it:

While that U = kx²/2 formula looks similar to the kinetic energy formula, you should note that it’s a function of the position, not of velocity, and that the formula does not involve the mass of the object we’re attaching to the spring. So it’s a different animal altogether. However, because of the energy conservation law, the graphs of the potential and kinetic energy will obviously mirror each other, just like the energy graphs of a swinging pendulum, as shown below. We have:

T + U = mv²/2 + kx²/2 = C
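Both claims (the work to compress the spring is kx²/2, and T + U stays constant) can be checked with a small sketch. It assumes an ideal, frictionless oscillator with the textbook solution x(t) = A·cos(ωt) and ω = √(k/m). The mass, spring constant and amplitude are hypothetical values chosen only for illustration.

```python
import math

# Hypothetical values, chosen only for illustration.
m = 0.5    # mass attached to the spring, kg
k = 20.0   # spring constant, N/m
A = 0.1    # amplitude of the oscillation, m
omega = math.sqrt(k / m)  # angular frequency of the ideal oscillator

def spring_work(x, steps=1000):
    """Midpoint-rule sum of k*x' dx' from 0 to x: the work needed to compress the spring."""
    dx = x / steps
    return sum(k * (i + 0.5) * dx * dx for i in range(steps))

def energies(t):
    """Kinetic and potential energy at time t for x(t) = A*cos(omega*t)."""
    x = A * math.cos(omega * t)
    v = -A * omega * math.sin(omega * t)
    return 0.5 * m * v ** 2, 0.5 * k * x ** 2

print(f"work to compress by A: {spring_work(A):.6f} J   kA²/2 = {0.5 * k * A ** 2:.6f} J")
for i in range(5):
    T, U = energies(0.1 * i)
    print(f"t = {0.1 * i:.1f} s   T = {T:.4f} J   U = {U:.4f} J   T + U = {T + U:.4f} J")
```

The kinetic and potential energy trade places as the mass moves back and forth, but their sum stays at kA²/2 throughout.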

Note: The graph above mentions an ‘ideal’ pendulum because, in reality, there will be an energy loss due to friction and, hence, the pendulum will slowly stop, as shown below. Hence, in reality, energy is conserved, but it leaks out of the system we are observing here: it gets lost as heat, which is another form of kinetic energy actually.

Another application: estimating the radius of an atom

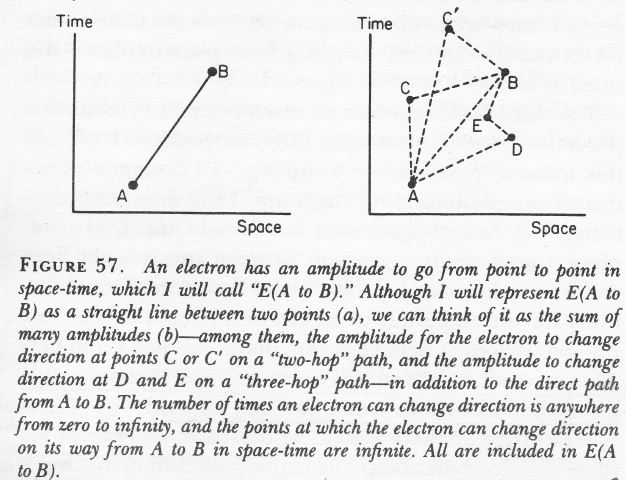

A very nice application of the energy concepts introduced above is the so-called Bohr model of a hydrogen atom. Feynman introduces that model as an estimate of the size (or radius) of an atom (see Feynman’s Lectures, Vol. III, p. 2-6). The argument is the following.

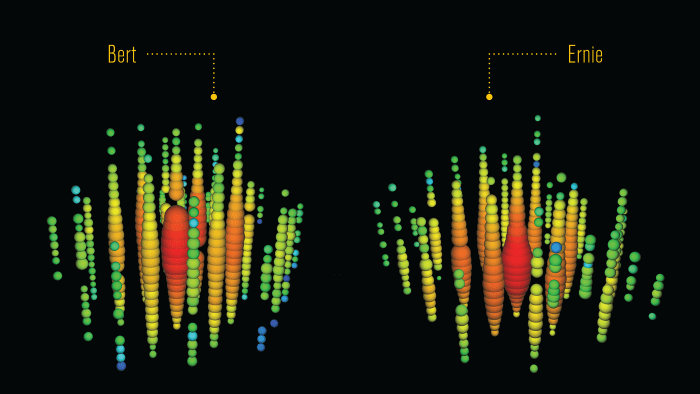

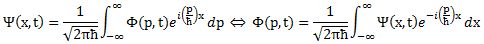

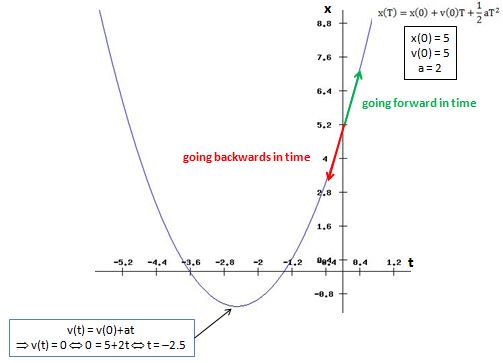

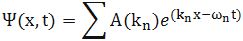

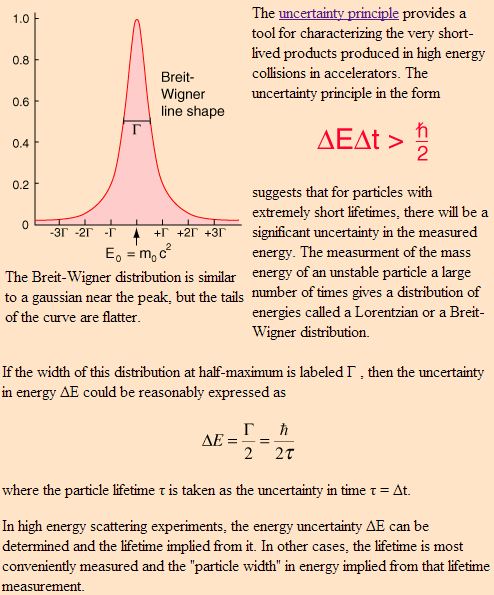

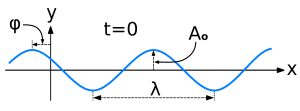

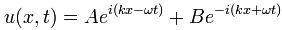

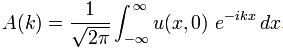

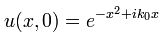

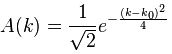

The radius of an atom is more or less the spread (usually denoted by Δ or σ) in the position of the electron, so we can write that Δx = a. In words, the uncertainty about the position is the radius a. Now, we know that the uncertainty about the position (x) also determines the uncertainty about the momentum (p = mv) of the electron because of the Uncertainty Principle ΔxΔp ≥ ħ/2 (ħ ≈ 6.6×10⁻¹⁶ eV·s). The principle is illustrated below, and in a previous post I proved the relationship. [Note that k in the left graph actually represents the wave number of the de Broglie wave, but wave number and momentum are related through the de Broglie relation p = ħk.]

Hence, the order of magnitude of the momentum of the electron will – very roughly – be p ≈ ħ/a. [Note that Feynman doesn’t care about factors 2 or π or even 2π (h = 2πħ): the idea is just to get the order of magnitude (Feynman calls it a ‘dimensional analysis’), and that he actually equates p with p = h/a, so he doesn’t use the reduced Planck constant (ħ).]

Now, the electron’s potential energy will be given by that U(r) = q₁q₂/4πε₀r formula above, with q₁ = e (the charge of the proton) and q₂ = –e (i.e. the charge of the electron), so, with r = a and again omitting the 4πε₀ factor, we can simplify this to –e²/a.

The kinetic energy of the electron is given by the usual formula: T = mv²/2. This can be written as T = mv²/2 = m²v²/2m = p²/2m = h²/2ma². Hence, the total energy of the electron is given by

E = T + U = h²/2ma² – e²/a

What does this say? It says that the potential energy becomes smaller as a gets smaller (that’s because of the minus sign: when we say ‘smaller’, we actually mean a larger negative value). However, as the electron gets closer to the nucleus, its kinetic energy increases. In fact, the shape of this function is similar to that graph depicting the potential energy of a covalent bond as a function of the distance, but you should note that the blue graph below is the total energy (so it’s not only potential energy but kinetic energy as well).

I guess you can now anticipate the rest of the story. The electron will be there where its total energy is minimized. Why? Well… We could call it the minimum energy principle, but that’s usually used in another context (thermodynamics). Let me just quote Feynman here, because I don’t have a better explanation: “We do not know what a is, but we know that the atom is going to arrange itself to make some kind of compromise so that the energy is as little as possible.”

He then calculates, as expected, the derivative dE/da, which equals dE/da = –h²/ma³ + e²/a². Setting dE/da equal to zero, we get the ‘optimal’ value for a:

a₀ = h²/me² = 0.528×10⁻¹⁰ m = 0.528 Å (angstrom)

Note that this calculation depends on the value one uses for e: to be correct, we need to put the 4πε0 factor back in. You also need to ensure you use proper and compatible units for all factors. Just try a couple of times and you should find that 0.528 value.
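For those who want to try, here is a minimal sketch of the calculation in SI units, with the 4πε₀ factor put back in, so e² in the formula becomes e²/4πε₀. The constants are standard published values that I am assuming here, and I read the h in the formula as the reduced Planck constant ħ, in line with p ≈ ħ/a above. The variable and function names are illustrative only.

```python
import math

# Standard SI values (assumed here; the post itself does not list them).
HBAR = 1.054571817e-34      # reduced Planck constant, J·s
M_E = 9.1093837015e-31      # electron mass, kg
E_CHARGE = 1.602176634e-19  # elementary charge, C
EPS0 = 8.8541878128e-12     # electric constant, F/m

COULOMB = E_CHARGE ** 2 / (4.0 * math.pi * EPS0)  # e²/(4πε₀), in J·m

def total_energy(a):
    """E(a) = ħ²/(2·m·a²) - e²/(4πε₀·a), in joules, for a radius a in metres."""
    return HBAR ** 2 / (2.0 * M_E * a ** 2) - COULOMB / a

# Setting dE/da = 0 gives a0 = ħ²/(m·e²/(4πε₀)).
a0 = HBAR ** 2 / (M_E * COULOMB)
print(f"a0 = {a0 * 1e10:.3f} angstrom")

# Sanity check: the energy is higher on either side of a0.
print(total_energy(0.9 * a0) > total_energy(a0) < total_energy(1.1 * a0))
```

Note that reading h as the full Planck constant (h = 2πħ) would make the result (2π)² ≈ 39.5 times larger, so the choice between h and ħ matters a great deal for the number that comes out.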

Of course, the question is whether or not this back-of-the-envelope calculation resembles anything real. It does: this number is very close to the so-called Bohr radius, which is the most probable distance between the proton and the electron in a hydrogen atom (in its ground state) indeed. The Bohr radius is an actual physical constant and has been measured to be about 0.529 angstrom. Hence, for all practical purposes, the above calculation corresponds with reality. [Of course, while Feynman started with writing that we shouldn’t trust our answer within factors like 2, π, etcetera, he concludes his calculation by noting that he used all constants in such a way that it happens to come out the right number. :-)]

The corresponding energy for this value for a can be found by putting the value a0 back into the total energy equation, and then we find:

E₀ = –me⁴/2h² = –13.6 eV

Again, this corresponds to reality, because this is the energy that is needed to kick an electron out of its orbit or, to use proper language, this is the energy that is needed to ionize a hydrogen atom (it’s referred to as a Rydberg of energy). By way of conclusion, let me quote Feynman on what this negative energy actually means: “[Negative energy] means that the electron has less energy when it is in the atom than when it is free. It means it is bound. It means it takes energy to kick the electron out.”
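The –13.6 eV figure can be reproduced with the same approach: SI units, e² replaced by e²/4πε₀, and h read as the reduced Planck constant ħ. The constants below are standard published values that I am assuming, and the names are illustrative only.

```python
import math

# Standard SI values (assumed here; the post itself does not list them).
HBAR = 1.054571817e-34      # reduced Planck constant, J·s
M_E = 9.1093837015e-31      # electron mass, kg
E_CHARGE = 1.602176634e-19  # elementary charge, C (also the joule-per-eV factor)
EPS0 = 8.8541878128e-12     # electric constant, F/m

COULOMB = E_CHARGE ** 2 / (4.0 * math.pi * EPS0)  # e²/(4πε₀), in J·m

# E0 = -m·(e²/4πε₀)² / (2ħ²), first in joules, then converted to electronvolts.
e0_joule = -M_E * COULOMB ** 2 / (2.0 * HBAR ** 2)
e0_ev = e0_joule / E_CHARGE
print(f"E0 = {e0_ev:.2f} eV")

# Cross-check: plug a0 back into E(a) = ħ²/(2·m·a²) - e²/(4πε₀·a).
a0 = HBAR ** 2 / (M_E * COULOMB)
e_at_a0 = HBAR ** 2 / (2.0 * M_E * a0 ** 2) - COULOMB / a0
print(f"E(a0) = {e_at_a0 / E_CHARGE:.2f} eV")
```

At a₀ the kinetic energy is exactly half the magnitude of the potential energy, which is why the total comes out as minus one half of e²/(4πε₀a₀).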

That being said, as we pointed out above, it is all a matter of choosing our reference point: we can add or subtract any constant C to the energy equation: E + C = T + U + C will still be constant and, hence, respect the energy conservation law. But so I’ll conclude here and – of course – check if my kids understand any of this.

And what about potential?

Oh – yes. I forgot. The title of this post suggests that I would also write something on what is referred to as ‘potential’, and it’s not the same as potential energy. So let me quickly do that.

By now, you are surely familiar with the idea of a force field. If we put a charge or a mass somewhere, then it will create a condition such that another charge or mass will feel a force. That ‘condition’ is referred to as the field, and one represents a field by field vectors. For a gravitational field, we can write:

F = mC

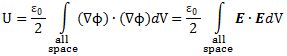

C is the field vector, and F is the force on the mass that we would ‘supply’ to the field for it to act on. Now, we can obviously re-write that integral for the potential energy as

U = –∫F·ds = –m∫C·ds = mΨ with Ψ (read: psi) = –∫C·ds = the potential

So we can say that the potential Ψ is the potential energy of a unit charge or a unit mass that would be placed in the field. Both C (a vector) as well as Ψ (a scalar quantity, i.e. a real number) obviously vary in space and in time and, hence, are a function of the space coordinates x, y and z as well as the time coordinate t. However, let’s leave time out for the moment, in order to not make things too complex. [And, of course, I should note that this psi has nothing to do with the probability wave function we introduced in previous posts. Nothing at all. It just happens to be the same symbol.]

Now, U is an integral, and so it can be shown that, if we know the potential energy, we also know the force. Indeed, the x-, y- and z-components of the force are equal to:

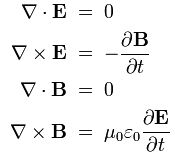

Fx = – ∂U/∂x, Fy = – ∂U/∂y, Fz = – ∂U/∂z or, using the grad (gradient) operator: F = –∇U

Likewise, we can recover the field vectors C from the potential function Ψ:

Cx = – ∂Ψ/∂x, Cy = – ∂Ψ/∂y, Cz = – ∂Ψ/∂z, or C = –∇Ψ

That grad operator is nice: it makes a vector function out of a scalar function.
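To see the grad operator at work, here is a sketch that recovers the gravitational force on a 10 kg mass at the Earth's surface from the potential energy U = –GMm/r, using simple central differences for the partial derivatives. The Earth's mass and radius are standard textbook values that I am assuming, and the step size of one metre is an arbitrary choice.

```python
import math

# Assumed constants (standard textbook values, not taken from the post).
G = 6.674e-11        # N·m²/kg²
M_EARTH = 5.972e24   # kg
R_EARTH = 6.371e6    # m
m = 10.0             # mass of the object, kg

def potential_energy(x, y, z):
    """U = -GMm/r, with r the distance to the centre of the Earth."""
    r = math.sqrt(x * x + y * y + z * z)
    return -G * M_EARTH * m / r

def force(x, y, z, h=1.0):
    """F = -grad U, approximated with central differences of step h (metres)."""
    fx = -(potential_energy(x + h, y, z) - potential_energy(x - h, y, z)) / (2.0 * h)
    fy = -(potential_energy(x, y + h, z) - potential_energy(x, y - h, z)) / (2.0 * h)
    fz = -(potential_energy(x, y, z + h) - potential_energy(x, y, z - h)) / (2.0 * h)
    return fx, fy, fz

fx, fy, fz = force(R_EARTH, 0.0, 0.0)
expected = -G * M_EARTH * m / R_EARTH ** 2  # -GMm/r², pointing back towards the centre
print(f"Fx = {fx:.4f} N (expected {expected:.4f} N), Fy = {fy:.4f} N, Fz = {fz:.4f} N")
```

The force comes out at about 98 N and points in the negative x-direction, i.e. opposite to the radius vector, which is exactly what the minus sign in the gravitational force law says.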

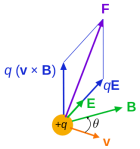

In the ‘electrical case’, we will write:

F = qE

And, likewise,

U = –∫F·ds = –q∫E·ds = qΦ with Φ (read: phi) = –∫E·ds = the electrical potential.

Unlike the ‘psi’ potential, the ‘phi’ potential is well known to us, if only because it’s expressed in volts. In fact, when we say that a battery or a capacitor is charged to a certain voltage, we actually mean the voltage difference between the parallel plates of which the capacitor or battery consists, so we are actually talking about the difference in electrical potential ΔΦ = Φ₁ – Φ₂, which we also express in volts, just like the electrical potential itself.

Post scriptum:

The model of the atom that is implied in the above derivation is referred to as the so-called Bohr model. It is a rather primitive model (Wikipedia calls it a ‘first-order approximation’) but, despite its limitations, it’s a proper quantum-mechanical view of the hydrogen atom and, hence, Wikipedia notes that “it is still commonly taught to introduce students to quantum mechanics.” Indeed, that’s why Feynman also uses it in one of his first Lectures on Quantum Mechanics (Vol. III, Chapter 2), before he moves on to more complex things.

Some content on this page was disabled on June 20, 2020 as a result of a DMCA takedown notice from Michael A. Gottlieb, Rudolf Pfeiffer, and The California Institute of Technology.

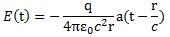

As for the constants in this formula, you know these by now: the speed of light c, the electron charge e, its mass me, and the permittivity of free space εe. For whatever it’s worth (because you should note that, in quantum mechanics, electrons do not have a size: they are treated as point-like particles, so they have a point charge and zero dimension), that’s small. It’s in the femtometer range (1 fm = 1

As for the constants in this formula, you know these by now: the speed of light c, the electron charge e, its mass me, and the permittivity of free space εe. For whatever it’s worth (because you should note that, in quantum mechanics, electrons do not have a size: they are treated as point-like particles, so they have a point charge and zero dimension), that’s small. It’s in the femtometer range (1 fm = 1

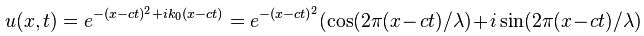

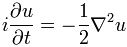

Now if we plug that in the equation above, we get our time-dependent Schrödinger equation:

Now if we plug that in the equation above, we get our time-dependent Schrödinger equation: